Update dependency torch to >=2.10,<3 [SECURITY] #151

Open

renovate[bot] wants to merge 1 commit into master



This PR contains the following updates:

torch: >=1.2,<2.1 → >=2.10,<3

GitHub Vulnerability Alerts

CVE-2024-31583

PyTorch before v2.2.0 was discovered to contain a use-after-free vulnerability in torch/csrc/jit/mobile/interpreter.cpp.

CVE-2024-31580

PyTorch before v2.2.0 was discovered to contain a heap buffer overflow vulnerability in the component /runtime/vararg_functions.cpp. This vulnerability allows attackers to cause a Denial of Service (DoS) via a crafted input.

CVE-2025-2953

A vulnerability, which was classified as problematic, has been found in PyTorch 2.6.0+cu124. Affected by this issue is the function torch.mkldnn_max_pool2d. The manipulation leads to denial of service. An attack has to be approached locally. The exploit has been disclosed to the public and may be used.

CVE-2025-3730

A vulnerability, which was classified as problematic, was found in PyTorch 2.6.0. Affected is the function torch.nn.functional.ctc_loss of the file aten/src/ATen/native/LossCTC.cpp. The manipulation leads to denial of service. An attack has to be approached locally. The exploit has been disclosed to the public and may be used. The name of the patch is 46fc5d8e360127361211cb237d5f9eef0223e567. It is recommended to apply a patch to fix this issue.

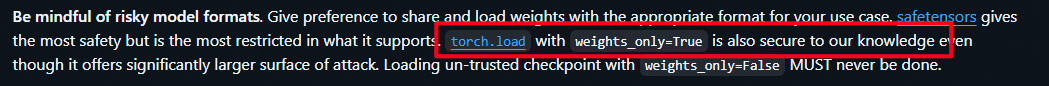

CVE-2025-32434

Description

I found a Remote Command Execution (RCE) vulnerability in PyTorch. When a model is loaded using torch.load with weights_only=True, RCE can still be achieved.

Background knowledge

https://github.com/pytorch/pytorch/security

As you can see, the official PyTorch documentation considers using torch.load() with weights_only=True to be safe. Since everyone knows that weights_only=False is unsafe, they use weights_only=True to mitigate the security issue.

But now, I have just proved that even if you use weights_only=True, RCE can still be achieved.

Credit

This vulnerability was found by Ji'an Zhou.
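Since the advisories above are tied to specific PyTorch versions, a project may want to confirm at startup that the installed version falls inside the range this PR introduces (>=2.10,<3). The following is a minimal sketch under stated assumptions, not part of the PR: the helper names `parse_release` and `satisfies_constraint` are hypothetical, only the major and minor components are inspected, and pre-release or dev suffixes are ignored.

```python
import re
from typing import Tuple

# Bounds copied from this PR's new constraint (>=2.10,<3).
FLOOR: Tuple[int, int] = (2, 10)
CEILING_MAJOR: int = 3


def parse_release(version: str) -> Tuple[int, int]:
    """Return (major, minor) from a string such as '2.6.0+cu124'.

    Hypothetical helper: anything after 'major.minor' is ignored.
    """
    match = re.match(r"^v?(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def satisfies_constraint(version: str) -> bool:
    """True if the version lies in [2.10, 3) by major/minor comparison."""
    major, minor = parse_release(version)
    # Compare as integer tuples: as plain strings, "2.10" sorts before "2.9".
    return FLOOR <= (major, minor) and major < CEILING_MAJOR
```

In an application this could be called as `satisfies_constraint(torch.__version__)` before any call to `torch.load`. For full PEP 440 semantics, including pre-releases, `packaging.specifiers.SpecifierSet` is likely the more robust choice.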

Release Notes

pytorch/pytorch (torch)

v2.10.0: PyTorch 2.10.0 Release

PyTorch 2.10.0 Release Notes

Highlights

torch.compile(). Python 3.14t (freethreaded build) is experimentally supported as well.

torch.compile() now respects use_deterministic_mode

For more details about these highlighted features, you can look at the release blogpost. Below are the full release notes for this release.

Backwards Incompatible Changes

Dataloader Frontend

data_source argument from Sampler (#163134). This is a no-op, unless you have a custom sampler that uses this argument. Please update your custom sampler accordingly.

from torch.utils.data.datapipes.iter.grouping import SHARDING_PRIORITIES, ShardingFilterIterDataPipe is no longer supported. Please import from torch.utils.data.datapipes.iter.sharding instead.

torch.nn

nn.attention.flex_attention (#161734)

ONNX

fallback=False is now the default in torch.onnx.export (#162726)

dynamo=True option without fallback. This is the recommended way to use the ONNX exporter. To preserve 2.9 behavior, manually set fallback=True in the torch.onnx.export call.

Release Engineering

Deprecations

Distributed

We decided to deprecate an existing behavior which goes against the PyTorch design principle (explicit over implicit) for device mesh slicing of flattened dim.

Version <2.9

Version >=2.10

Ahead-Of-Time Inductor (AOTI)

from/to to torch::stable::detail (#164956)

JIT

torch.jit is not guaranteed to work in Python 3.14. Deprecation warnings have been added to user-facing torch.jit API (#167669).

torch.jit should be replaced with torch.compile or torch.export.

ONNX

dynamic_axes option in torch.onnx.export is deprecated (#165769)

Users should supply the dynamic_shapes argument instead. See https://docs.pytorch.org/docs/stable/export.html#expressing-dynamism for more documentation.

Profiler

export_memory_timeline method (#168036)

The export_memory_timeline method in torch.profiler is being deprecated in favor of the newer memory snapshot API (torch.cuda.memory._record_memory_history and torch.cuda.memory._export_memory_snapshot). This change adds the deprecated decorator from typing_extensions and updates the docstring to guide users to the recommended alternative.

New Features

Autograd

torch.utils.checkpoint.checkpoint (#166536)

Complex Frontend

ComplexTensor subclass (#167621)

Composability

cuDNN

Distributed

LocalTensor:
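As a rough mental model for this section, LocalTensor holds a mapping from rank IDs to local shards, applies each operation to every shard independently, and simulates collectives without any network traffic. The toy class below illustrates only that idea in plain Python. It is not the PyTorch API: `ToyLocalTensor`, `map`, and `all_reduce_sum` are hypothetical names, and shards are plain lists rather than tensors.

```python
from typing import Callable, Dict, List


class ToyLocalTensor:
    """Toy stand-in: maps each simulated rank ID to that rank's local shard."""

    def __init__(self, shards: Dict[int, List[float]]) -> None:
        self.shards = shards

    def map(self, op: Callable[[List[float]], List[float]]) -> "ToyLocalTensor":
        # Apply the operation independently to every rank's shard,
        # so uneven shard sizes across ranks are fine here.
        return ToyLocalTensor({rank: op(shard) for rank, shard in self.shards.items()})

    def all_reduce_sum(self) -> "ToyLocalTensor":
        # Simulate a sum all-reduce in one process: every rank ends up
        # holding the same elementwise total, with no communication.
        lengths = {len(shard) for shard in self.shards.values()}
        if len(lengths) != 1:
            raise ValueError("all_reduce_sum needs equally sized shards")
        length = lengths.pop()
        total = [sum(shard[i] for shard in self.shards.values()) for i in range(length)]
        return ToyLocalTensor({rank: list(total) for rank in self.shards})


# Two simulated ranks, each holding its own shard.
t = ToyLocalTensor({0: [1.0, 2.0], 1: [3.0, 4.0]})
doubled = t.map(lambda shard: [2 * x for x in shard])
reduced = doubled.all_reduce_sum()
```

Inspecting `doubled.shards` and `reduced.shards` shows the per-rank state at each step, which is the kind of single-process inspection this section describes.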

LocalTensor is a powerful debugging and simulation tool in PyTorch's distributed tensor ecosystem. It allows you to simulate distributed tensor computations across multiple SPMD (Single Program, Multiple Data) ranks on a single process. This is incredibly valuable for: 1) debugging distributed code without spinning up multiple processes; 2) understanding DTensor behavior by inspecting per-rank tensor states; 3) testing DTensor operations with uneven sharding across ranks; 4) rapid prototyping of distributed algorithms. Note that LocalTensor is designed for debugging purposes only. It has significant overhead and is not suitable for production distributed training.

LocalTensor is a torch.Tensor subclass that internally holds a mapping from rank IDs to local tensor shards. When you perform a PyTorch operation on a LocalTensor, the operation is applied independently to each local shard, mimicking distributed computation (LocalTensor simulates collective operations locally without actual network communication).

LocalTensorMode is the context manager that enables LocalTensor dispatch. It intercepts PyTorch operations and routes them appropriately. The @maybe_run_for_local_tensor decorator is essential for handling rank-specific logic when implementing distributed code.

LocalTensor, users import from torch.distributed._local_tensor, initialize a fake process group, and wrap their distributed code in a LocalTensorMode context. Within this context, DTensor operations automatically produce LocalTensors.

c10d:

shrink_group implementation to expose ncclCommShrink API (#164518)

Dynamo

torch.compile now fully works in Python 3.14 (#167384)

skip_fwd_side_effects_in_bwd_under_checkpoint) to allow eager and compile activation-checkpointing divergence for side-effects (#165775)

torch._higher_order_ops.print for enabling printing without graph breaks or reordering (#167571)

FX

Added node metadata annotation API

Disable preservation of node metadata when enable=False (#164772)

Annotation should be mapped across submod (#165202)

Annotate bw nodes before eliminate dead code (#165782)

Add logging for debugging annotation (#165797)

Override metadata on regenerated node in functional mode (#166200)

Skip copying custom meta for gradient accumulation nodes; tag with is_gradient_acc=True (#167572)

Add metadata hook for all nodes created in runtime_assert pass (#169497)

Update gm.print_readable to include Annotation (#165397)

Add annotation to assertion nodes in export (#167171)

Add debug mode to print meta in fx graphs (#165874)

Inductor

torch.compiler.config.force_disable_caches as a public API. (#166699)

Ahead-Of-Time Inductor (AOTI)

MPS

(#162349, #162349, #162007, #162910, #162885, #163011, #163694, #164961, #165102, #166708, #166711, #167013, #169125, #165232, #166708, #168154, #169368, #167908, #168112)

torch.nn

nn.functional.scaled_mm (#164142)

nn.functional.scaled_grouped_mm (#165154)

nn.attention.varlen_attn (#164502, #164504)

nn.functional.grouped_mm (#168298)

ONNX

torch.onnx.testing with a testing utility assert_onnx_program (#162495)

Profiler

RecordFunctionFast (#162661)

Quantization

Add _scaled_mm_v2 API (#164141)

Add scaled_grouped_mm_v2 and python API (#165154)

Add embedding_bag_byte_prepack_with_rowwise_min_max and embedding_bag_{2/4}bit_prepack_with_rowwise_min_max (#162924)

Add MXFP4 support for _scaled_grouped_mm_v2 via FBGEMM kernels (#166530)

Release Engineering

ROCm

XPU

scaled_mm and scaled_mm_v2 for Intel GPU (#166056)

_weight_int8pack_mm for Intel GPU (#160938)

torch.xpu.get_per_process_memory_fraction for Intel GPU (#165511)

torch.xpu.set_per_process_memory_fraction for Intel GPU (#165510)

torch.xpu.is_tf32_supported for Intel GPU (#163141)

torch.xpu.can_device_access_peer for Intel GPU (#162705)

torch.accelerator.get_memory_info for Intel GPU (#162564)

Improvements

Build Frontend

Composability

torch.compile(backend="aot_eager") backend, it should now give bitwise equivalent results in eager. Previously it sometimes would not due to extra compile-only decompositions running (#165910)

torch._check over torch._check_is_size (#164889,

C++ Frontend

TORCH_CHECK_{COND} behavior to be non-fatal (#167004)

TypeTraits, TypeList, Metaprogramming, DeviceType, MemoryFormat, Layout, version.h, and CppTypeToScalarType to torch::headeronly (#167386, #163999, #168034, #165153, #164381, #167610)

libfmt submodule version to 12.0.0 (#163441)

CUDA

torch.cuda.rng_set_state and torch.cuda.rng_get_state work in CUDA graph capture. (#162505)

header_code argument (#163165)

launch_logcumsumexp_cuda_kernel (#164567)

per_process_memory_fraction option to PYTORCH_CUDA_ALLOC_CONF (#161035)

Distributed

c10d

Context Parallel

parallelize_module (#162542)

flex_cp_forward custom op for FlexAttention CP (#163185)

_templated_ring_attention to the backward compatibility stub (#166991)

_LoadBalancer classes, and load-balance interface to Context Parallel APIs with process-time based Round-Robin load-balance (#161062, #163617)

DeviceMesh

_unflatten on top of CuTe layout bookkeeping (#161224, #165521)

_rank for use with non-global PGs (#162439)

FullyShardDataParallel (FSDP1 and FSDP2)

reset_sharded_param: no-op if _local_tensor is already padded (#163130)

DTensor

_foreach_pow, logsumexp and masked_fill_.Scalar to sharding propagation list. (#162895, #163879, #169668)

SymmetricMemory

multimem_one_shot_reduce_out (#164517)

multi_root_tile_reduce (#162243, #164757)

symm_mem_sync Triton kernel to torch.ops.symm_mem (#168917)

set_signal_pad_size API for SymmetricMemory (#169156)

Pipeline Parallelism

PipelineScheduleRuntime (#164777)

OVERLAP_F_B in schedule (#161072)

torchelastic

TailLog (#167169)

Dynamo

Turn on capture_scalar_outputs and capture_dynamic_output_shape_ops when fullgraph=True (#163121, #163123)

Improved tracing for dict key hashing (#169204)

Tracing support for torch.cuda.stream (#166472)

Improved tracing of torch.autograd.Functions (#166788)

Miscellaneous smaller tracing support additions:

Extend collections.defaultdict support with *args, **kwargs and custom default_factory (#166793)

Support for bitwise xor (#166065)

Support repr on user-defined objects (#167372)

Support new typing union syntax X | Y (#166599)

Export

FX

Inductor

torch._inductor.config.combo_kernels (#162442) (#166274) (#162759) (#167781) (#168127) (#168946) (#168109) (#164918)

qconv_pointwise.tensor and qconv2d_pointwise.binary_tensor quantized operations. (#166608)

out_dtype argument for matmul operations. (#163393)

fast_tanhf on ROCm. (#162052)

fallback_embedding_bag_byte_unpack. (#163803)

pad_mm and decompose_mm_pass pass on Intel GPU. (#166618) (#166613)

native_layer_norm_backward meta function. (#159830)

assume_32bit_indexing inductor config option. (#167784)

MPS

embedding_bag operator (#163012, #163931, #163281)

embedding_bag and index_select ops (#166615, #168930, #166468)

Nested Tensor (NJT)

share_memory_ (#162272)

torch.nn

scaled_dot_product_attention (#166318)

BlockMask in nn.functional.flex_attention (#164702)

ONNX

tofile() in ONNX IR tensors for more efficient ONNX model serialization (#165195)

Optimizer

Adam, AdamW work with nonzero-dim Tensor betas (#149939)

Profiler

user_metadata display to memory visualizer (#165939)

Python Frontend

torch.library and custom ops to support view functions (#164520)

generator arg to rand*_like APIs (#166160)

Quantization

half and bf16 support for fused_moving_avg_obs_fake_quant (#162620, #164175)

bf16 support for fake_quantize_learnable_per_channel_affine (#165098)

bf16 support for backward of torch._fake_quantize_learnable_per_tensor_affine (#165362)

NVFP4 two-level scaling to scaled_mm (#165774)

fp8_input/fp8_weight/bf16_bias and bf16_output for fp8 qconv in CPU (#167611)

torch.float4_e2m1fn_x2 dtype support equality comparisons (#169575)

copy_ support for torch.float4_e2m1fn_x2 dtype (#169595)

Release Engineering

Configuration

📅 Schedule: Branch creation - "" (UTC), Automerge - At any time (no schedule defined).

🚦 Automerge: Disabled by config. Please merge this manually once you are satisfied.

♻ Rebasing: Whenever PR becomes conflicted, or you tick the rebase/retry checkbox.

🔕 Ignore: Close this PR and you won't be reminded about this update again.

This PR was generated by Mend Renovate. View the repository job log.